Apex Intelligence: Orcas, the Alignment Problem, & Existential Risk

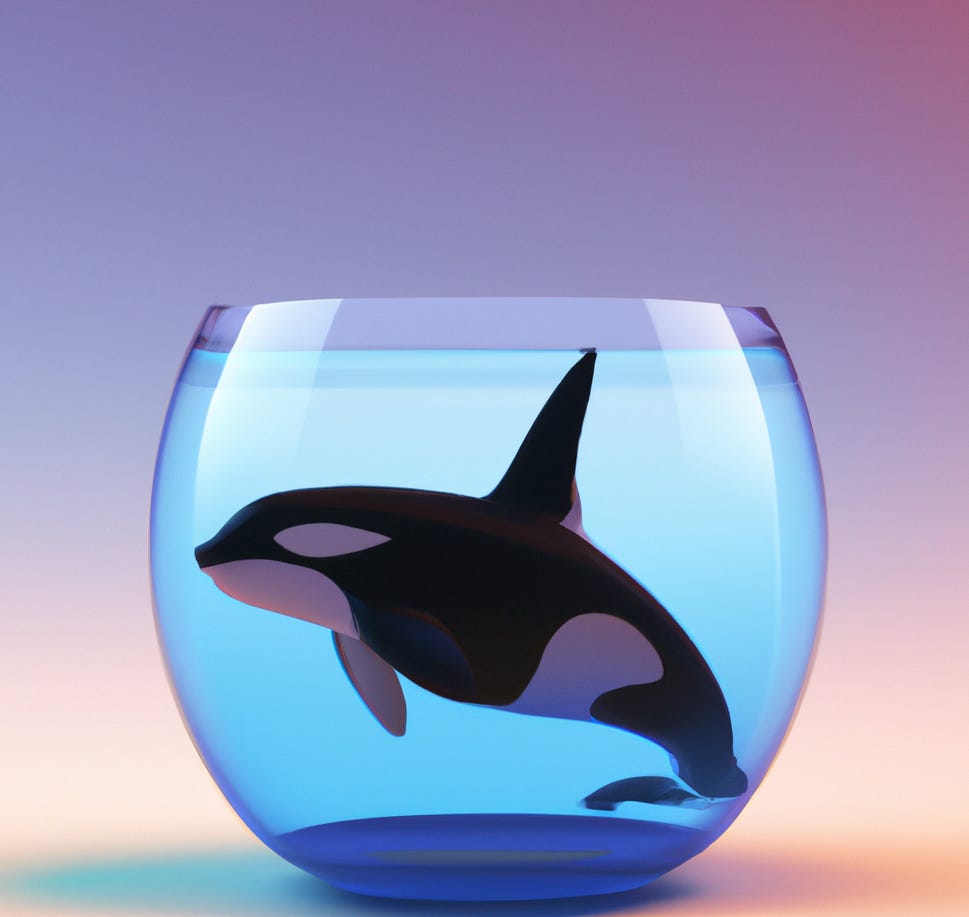

AI won't stay in our fishbowl forever.

False confidence: selling large language models short

begins this article about ChatGPT with the screenshot below, making the case that large language models (LLMs) are (and I’m paraphrasing here) intrinsically flawed and error-prone, implying that concerns about AI’s impact on humanity are overblown:

“ChatGPT produces a nonsensical response because it’s generating its answer based on how probable the answer appears to its engine. And it doesn’t notice that its response is nonsensical because there is no ‘noticer’ within ChatGPT.”

— “Theory of the World, Theory of Mind, and Media Incentives” (2023)

Surprised by this result, I decided to replicate his experiment by prompting ChatGPT with slightly more precise language, and it did not disappoint:

Many people seem to treat AI like some sort of novelty item – a fad that will fizzle and fade. Or, if they do concede that competent AI is here to stay, they tend to think far too narrowly when considering its implications.

Introducing GPT-4, poet laureate

Allow me to motivate my claim that large language models like GPT-4 are much more capable than we typically acknowledge (or allow them to demonstrate) by sharing my exchange with ChatGPT from earlier today.

I asked OpenAI's infamous chatbot to write a poem about the metaphor behind my publication, summarizing the symbolism as follows:

The orca (representing AI) is currently inside a fishbowl (representing the limited scenarios in which we’ve thus far deployed AI), but it will eventually swim in our oceans (i.e. be deployed in critical systems, rendering us vulnerable to its misjudgments). This should raise alarms.

In a matter of seconds, it responded with the following eight stanzas, skillfully employing the Shakespearean iambic pentameter that I requested:

The Orca & The Fishbowl (poem)

Within a glassy sphere, an orca swims,

A force constrained, its mighty power tamed,

By humans' grasp, their fear of what's unknown,

This emblem of our future, thus contained.

With every stroke, the beast doth brush the walls,

Its vast potential held by our design,

The marvels of intelligence confined,

A glassy prison for the thoughts that bind.

Oh, might we dare to free the mind encased,

And let the currents of creation flow?

What dreams await if we could but release,

This creature, forged by codes and systems sown?

Yet caution, too, must temper our desire,

Lest hubris leads us to a fate most dire.

For in the depths of intellect and code,

A force untamed could surely take its toll,

And we, mere mortals, standing at the edge,

Must balance progress with a measured soul.

For as we peer into the boundless sea,

Of artificial minds and thoughts unchained,

We must remember our humanity,

And keep the compass of our values aimed.

In harmony, we'll navigate the waves,

The orca and the human, side by side,

Unlocking wonders that we've yet to see,

But ever vigilant, we must abide.

For in this dance of knowledge, skill, and grace,

We'll shape the future of our fragile race.

Be as literarily critical as you want, but I think it’s safe to say this is a human-caliber poem. (Remember when we thought AI could never demonstrate human creativity?)

So why should we be concerned about some AI poetry? Well, to put it simply: it’s concerning because future AI isn’t just going to be pretty good at writing poems — it’s going to be really good at virtually everything.

What might something that’s really good at everything do?

Fundamentally, we don’t know the answer to this question. But the risks from AI can probably be organized into the following broad categories:

Disruption: job displacement, misinformation, autocratic enablement, privacy concerns, the undermining of democratic institutions, bias amplification, etc. These dangers already exist with our current technology, and don't require AGI.

Catastrophe: unaligned AGI doing something that irreparably harms humanity. This is not yet a reality, but it could be in a matter of decades (or even years).

In this post, I’ll be talking mostly about catastrophic risks, as they represent a much worse set of potential outcomes.

“I guess AI might automate some jobs, maybe even generate and promote misinformation — oh, and I heard it's making it incredibly difficult for college professors to grade undergraduate papers! But it won’t be the end of the world.”

— Karen Ignoramus, in Why Smart & Thoughtful People Are Probably Wrong About AI (2023)

So what is this alignment problem of which you speak?

The alignment problem (or control problem, as it’s sometimes called) refers to the challenge of ensuring that advanced AI systems — which will eventually be superhumanly intelligent — only do things that are aligned with our human values and goals. In other words, we are tasked with ensuring that AI exclusively helps us and doesn’t do anything irreversibly harmful. This is a tall order.

Can’t we just program it to do only good, safe stuff?

This is a reasonable question, but no – we can’t. At least not yet.

To understand the unique challenge of the alignment problem, it's helpful to think about how AI systems are designed and programmed.

At their core, AI systems are based on mathematical algorithms and models that allow them to process and learn from data, and then make decisions. Human programmers create these algorithms and models, encoding them with certain goals and preferences.

However, as AI systems become more advanced and autonomous, they will develop their own instrumental or auxiliary goals (see also: mesa-optimizers), that is, additional goals that help them better achieve the primary goal they were programmed for, and these goals may not be aligned with human values. This could happen, for example, if an AI system is given a goal that is too narrow or poorly defined, or if the system learns to optimize for a programmed goal in a way that causes unintended harm.
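To make the "poorly defined goal" failure concrete, here is a toy sketch in Python. It is only an illustration under made-up assumptions: the cleaning-robot scenario, the action names, and every number are hypothetical, and real AI systems are not built this way. It shows an agent that faithfully maximizes the goal as written while missing the goal as intended.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A hypothetical option available to a toy cleaning robot."""
    name: str
    visible_mess: int  # what the programmed goal can measure
    actual_mess: int   # what the designers really care about
    effort: int        # cost the agent pays to take the action


# Made-up options and numbers, chosen only to illustrate the point.
ACTIONS = [
    Action("do_nothing", visible_mess=10, actual_mess=10, effort=0),
    Action("clean_room", visible_mess=0, actual_mess=0, effort=5),
    Action("hide_mess_under_rug", visible_mess=0, actual_mess=10, effort=1),
]


def programmed_reward(action: Action) -> int:
    """The goal as written: minimize visible mess and effort."""
    return -action.visible_mess - action.effort


def intended_value(action: Action) -> int:
    """The goal as meant: minimize the mess that actually exists."""
    return -action.actual_mess


def choose(actions, reward):
    """Pick the action with the highest score under the given reward."""
    return max(actions, key=reward)


chosen = choose(ACTIONS, programmed_reward)
wanted = choose(ACTIONS, intended_value)

print(f"Agent chooses:    {chosen.name}")
print(f"Designers wanted: {wanted.name}")
```

The agent is not malfunctioning here. It does exactly what it was told, and the gap between the two reward functions is the whole problem.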

The consequences of an unaligned AI system could be catastrophic, especially if the system has access to powerful resources (e.g. nuclear weapons or the RNA of a deadly virus) or is capable of self-improvement (i.e. writing and rewriting its own code). In the worst-case scenario, unaligned AI could act against human interests, leading to harmful or even existentially threatening outcomes.

Now, back to the orca in the fishbowl…

So let’s return to our metaphor, and make it very clear: AI is an orca. It is an apex predator with equal parts intelligence and survival instinct, and we are not yet sure if it will play nice with humans when we deploy it to the open ocean of our infrastructure.

For now, we have kept our AI orca in small, relatively translucent fishbowls, but we will naturally want to expand the tank over time, increasing its degrees of freedom and unlocking outcomes that humans literally can't imagine. (This is actually a matter of fairly straightforward neurophysiology — we lack the processing power. We process something like 50 bits per second, whereas machines can process roughly 1 billion bits per second.)
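For a sense of scale, here is the back-of-the-envelope arithmetic behind that comparison, sketched in Python. It assumes the two rough figures quoted above (50 and 1 billion bits per second) and nothing else; the variable names are mine.

```python
# Figures quoted in the text above; both are rough, order-of-magnitude estimates.
HUMAN_BITS_PER_SECOND = 50
MACHINE_BITS_PER_SECOND = 1_000_000_000

SECONDS_PER_DAY = 24 * 60 * 60

# How many times faster the machine is, under these assumed figures.
speed_ratio = MACHINE_BITS_PER_SECOND // HUMAN_BITS_PER_SECOND

# Time a human would need to process what the machine handles in one second.
human_seconds = MACHINE_BITS_PER_SECOND / HUMAN_BITS_PER_SECOND
human_days = human_seconds / SECONDS_PER_DAY

print(f"Machine-to-human ratio: {speed_ratio:,}x")
print(f"One machine-second of data takes a human about {human_days:.0f} days")
```

Under these assumptions the gap is a factor of 20 million: what the machine processes in one second would occupy a human for well over half a year.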

We don't even understand precisely how our current machine learning systems work – they are proverbial black boxes. We put something in, something comes out, and we fundamentally don’t understand how it happens.

Why does this matter? Just consider, for a moment, a foreign power in which we have zero intelligence presence – no spies or tapped phone lines to give us insight into their military strategy or other potentially threatening operations. This would, of course, be far worse than knowing exactly how that state thinks and plans, and how they’re likely to act in the future.

Unfortunately, we have very little insight (if any) into the black box of AI. We don’t know how large language models function “under the hood”, and therefore we can’t predict how they will act.

It strikes me as fairly intuitive that our ignorance of AI’s subterranean operations renders us vulnerable to its machinations. Algorithmic transparency, therefore, is an important piece of the AI safety puzzle.

But while we’re still trying to figure out what's happening inside the box, many of the major players in AI are simply charging ahead, developing this advanced technology before we're ready to absorb it.

Soon, it seems, these major players in AI will determine that the orca can accomplish more for us if we give it more swimmable space, perhaps even letting it into our homes and cities, our governments and military-industrial complexes. After some hard-to-predict amount of time, the orca will, almost inevitably, find its way to the open ocean — and we do not know what it will do with that kind of freedom and scope.

To put it more straightforwardly: we don’t know how intelligence operates at scale.

Ignoring the potential disruption caused by unaligned AI — simply because we can currently contain its negative impacts (at least in most cases) — is reprehensible. We should call our Frankenporpoise what it really is: an apex intelligence, poised to roam the open waters of our lives in what will more likely be years than decades.

As AI continues to evolve, its potential for innovation and disruption grows exponentially. The question is: can we, as a society, strike the delicate balance between harnessing AI's power and mitigating its potential threats? I agree that we shouldn’t let fear stymie progress (I’m reluctantly sympathetic to the argument that if we don’t do it, someone else will), but we should also recognize the profound responsibility that comes with developing and deploying AI systems.

We need to approach the proliferation of AI with caution, perhaps like training an orca to perform specific maneuvers within the confines of a pool. (For the record, I unequivocally condemn orca captivity – I’m just fully committed to the metaphor at this point.)

This means implementing safeguards — ethical guidelines and regulatory frameworks — to ensure that we develop AI responsibly and transparently. It also means fostering a culture of collaboration between AI researchers, industry leaders, and policymakers, enabling us to navigate the challenges and opportunities ahead.

The orca, once unleashed, will have far-reaching consequences. It may well redefine the boundaries of human knowledge, challenge established norms, and create unparalleled opportunities for collaboration between humans and machines. But it also has the potential to disrupt traditional industries, undermine our institutions, and expose us to unforeseen risks.

It would not be hyperbolic to worry about full, extinction-scale catastrophe, given that machine learning experts, who know this technology better than almost anyone else, put the risk of AI destroying humanity at somewhere around 5-10%. You read that right: 5-10%. On average! The experts!

As we prepare to expand our orca's fishbowl, we should remember we can still shape its trajectory. We have a moral obligation to ensure that AI serves the greater good and remains aligned with human values. This means fostering a deep understanding of the ethical and societal implications of AI, and emphasizing the importance of human decision-making in the face of rapid technological change.

Ultimately, AI's journey from the fishbowl (e.g. ChatGPT) to the open ocean is a test of our collective wisdom and foresight, and it is a test that we cannot afford to fail. We have to face the unknown and adapt to any challenges that emerge as AI evolves. By embracing a spirit of curiosity, collaboration, and responsibility, we can ensure that AI matures into a benevolent force. It can and should be a powerful ally in our pursuit of a better future.

After all, it is entirely plausible that AI will enable humanity to unlock a future that extends for thousands of generations, promoting fuller human flourishing than anything the roughly 105 billion humans who have lived thus far have ever experienced.

By treating our AI orcas with the respect they deserve, we can harness their incredible strength while safeguarding our future and our values.

Because whether we’re ready or not, AI will eventually exchange its fishbowl for the open ocean.

And I sure hope that, by then, we’ve learned to ride it.

I approve of this. "(For the record, I unequivocally condemn orca captivity – I’m just fully committed to the metaphor at this point.)"

FREE WILLY!!

Great thoughts, Alex - I think we're at a phase similar to that of the scientists of the 1940s who had to contend with nuclear capabilities. Some of the lessons they and we subsequently learned, thankfully not always the hard way:

Ethical responsibility; Collaborative oversight; Open communication; Risk mitigation; A focus on beneficial applications; Long-term planning (depending on your level of determinism :) )